SICP stands for “Structure and Interpretation of Computer Programs”. It is an introductory computer science book, written by Hal Abelson and Gerald Sussman for the introductory computer science course they gave at MIT from 1981 until 2007. A professional recording of the lectures, made in 1986, is available for download online, as is the book itself.

Why so much controversy?

I had missed these comments, and the resultant Reddit gossiping – a continuation of a previous argument between two programming language subgroups. Guido tweeted as follows:

As I feared, the copy of SICP is from someone who believes I am not properly educated. No thanks for the backhanded insult.

I have to admit, sending someone an introductory computer science text can carry a lot of connotations, whether intended or otherwise. Guido gave his two cents on the book, tacking it on to a review of some intro programming books. A little uproar followed.

My impression

I first encountered SICP only this spring – I had heard of its controversial nature secondhand, having gone to a Java school. I noticed a coworker had a copy, so I borrowed it and gave it a weekend skim.

It really is an introductory text, but it is old-style in expecting the reader to be really smart and to work at the material. I wouldn’t call it unforgiving though – it doesn’t do any hand-holding, but at the same time, it doesn’t give you any problems that you shouldn’t be able to solve. The examples are practical instead of contrived, but still idealized and simplified. In case you can’t tell already, let me translate: I was hooked.

Unlike van Rossum, I very much enjoyed the book. I also like Coke. Maybe he likes Pepsi. What’s the big deal?

It’s not just a book… it’s an idea

If you watch the video lectures, you might see the same thing I did: SICP isn’t just a book, or just instruction. It is those things, but it’s also a particular manner of thinking, or rather a particular improvement to the way we express our thoughts about software. In the preface to the first edition, the authors write (emphasis mine):

First, we want to establish the idea that a computer language is not just a way of getting a computer to perform operations but rather that it is a novel formal medium for expressing ideas about methodology. Thus, programs must be written for people to read, and only incidentally for machines to execute. Second, we believe that the essential material to be addressed by a subject at this level is […] the techniques used to control the intellectual complexity of large software systems.

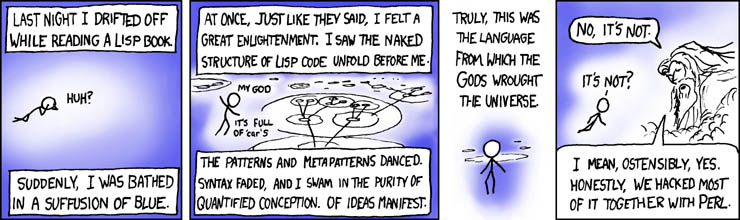

The idea, premise, or belief that they demonstrated to me in their lectures is that through abstraction you can manage software complexity, and build anything you can imagine with software. More importantly, you can design that software in such a way that the code can, piece by piece, be understood and commanded by anyone suitably knowledgeable in the art. The lectures eventually climax with a simplified, but complete, model of the language itself, called the metacircular interpreter. Using little or no sleight of hand, each piece can be understood and composed independently. By comprehending the incantations you can create new ones, without bound, like magic.
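To give a rough feel for how small such a model can be, here is a minimal sketch of an evaluator for a tiny Lisp-like language. It is my own illustration, not the code from the book or the lectures, and it is written in Python rather than Scheme, so strictly speaking it is not metacircular (that term applies when the interpreter is written in the language it interprets). The nested-list representation and the names `evaluate`, `GLOBAL_ENV`, and `square` are all assumptions made for this example.

```python
import operator


def evaluate(expr, env):
    """Evaluate a tiny Lisp-like expression written as nested Python lists.

    Illustrative sketch only: numbers, variables, `if`, `lambda`, and
    procedure application are supported, and nothing else.
    """
    if isinstance(expr, (int, float)):       # numbers evaluate to themselves
        return expr
    if isinstance(expr, str):                # symbols are looked up in the environment
        return env[expr]
    head, *rest = expr
    if head == "if":                         # special form: evaluate only one branch
        test, consequent, alternative = rest
        branch = consequent if evaluate(test, env) else alternative
        return evaluate(branch, env)
    if head == "lambda":                     # special form: build a closure over env
        params, body = rest
        return lambda *args: evaluate(body, {**env, **dict(zip(params, args))})
    # Everything else is an application: evaluate operator and operands, then apply.
    procedure = evaluate(head, env)
    arguments = [evaluate(operand, env) for operand in rest]
    return procedure(*arguments)


# A hypothetical global environment with a few primitive procedures.
GLOBAL_ENV = {"+": operator.add, "*": operator.mul, "<": operator.lt}

# (lambda (x) (* x x)) written as nested lists.
square = ["lambda", ["x"], ["*", "x", "x"]]

print(evaluate([square, 7], GLOBAL_ENV))                          # 49
print(evaluate(["if", ["<", 1, 2], ["+", 1, 2], 0], GLOBAL_ENV))  # 3
```

Each branch of `evaluate` can be read on its own, and new procedures like `square` are built out of the same few pieces, which is roughly the point the lectures make at much greater depth.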

I’m not inventing the magic metaphor – Sussman had a cape and wizard’s staff at the end of that lecture.

It’s a philosophy…

Seriously though, it prescribes a model of reality and a method of reasoning about it. And like many philosophies, you can make a way of life or practice out of it. Some people will be really enthusiastic about it, having found much benefit, happiness, or both from it. Those proponents can expound on its virtues, and the general applicability of those virtues may make it sound as if it’s a cure-all.

And likewise, there will be opponents who have a profound distaste for it. They may object to its reasoning or axioms, or its results, or both. In some way it appears to conflict with their own philosophy; whether the conflict is real or only superficial is not consequential for making them opponents.

I get the impression that many opponents of SICP are also proponents of software abstraction, but come at it a different way or understand the thing differently. We’re not really at odds with each other most of the time; more often we are experiencing a communication failure.

SICP certainly does have an agenda. But we are seeing it differently.

Epilogue

I can’t claim any philosophical neutrality whatsoever on SICP.

I finished watching the lectures this past week, having read the book cover to cover the week prior after ordering it on Amazon. What can I say? I’ve joined the order of the lambda.

I think Peter Norvig put it best in his review of SICP on Amazon:

I think its fascinating that there is such a split between those who love and hate this book. […]

Those who hate SICP think it doesn’t deliver enough tips and tricks for the amount of time it takes to read. But if you’re like me, you’re not looking for one more trick, rather you’re looking for a way of synthesizing what you already know, and building a rich framework onto which you can add new learning over a career. That’s what SICP has done for me.

Cheers.